Working Mechanism of Google Gemini

Gemini 1.0: Google’s Multimodal AI Triumph and Its Capabilities’ Impact (continued)

Written by Team, Latest AI News India

Google Gemini is trained on a massive corpus of data. The model uses a range of neural network techniques to comprehend content, answer questions, and generate text and other outputs. Notably, Gemini large language models (LLMs) use a Transformer-based neural network architecture, optimized to process long contextual sequences across diverse data types, including text, audio, and video.

Efficient attention mechanisms in the Transformer decoder help the Gemini architecture handle long contextual sequences that span different modalities. Training uses diverse multimodal and multilingual datasets, with advanced data filtering by Google DeepMind to optimize training. Targeted fine-tuning is then used to optimize the models for specific use cases.

Both the training and inference phases benefit from Google’s TPU v4 and v5e chips, specialized AI accelerators designed for efficient training and deployment of large models. Gemini underwent extensive safety testing and mitigation work, addressing risks such as bias and toxicity in large language models (LLMs). Academic benchmarks across the language, image, audio, video, and code domains were used to evaluate the effectiveness of Gemini models.

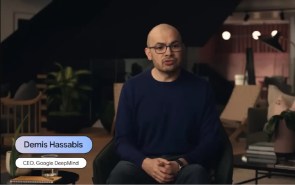

The team of intelligent brains creating Google Gemini

Designing the mind of artificial intelligence has begun with the invention of Google Gemini / GPT-4

In the relentless pursuit of creating an artificial brain, the inception of Google Gemini and GPT-4 marks a groundbreaking chapter. As we embark on the journey of designing the very essence of intelligence, it’s crucial to recognize that we are forging a mind more potent than our own. A future awaits where this formidable intellect resides within a robot, armed with a wealth of global information delivered through the vast expanse of the internet.

However, with this power comes the responsibility to safeguard against misuse and overreach. We stand at the crossroads of a miraculous achievement and a stern warning: the birth of an artificial brain. Google Gemini and ChatGPT serve as the pioneering pillars of this transformative architecture, setting the stage for a competition in which these minds could evolve beyond human control.

As we witness the ascent of these powerful intellects, we must tread carefully, ensuring that humanity maintains its dominion over the information these minds possess. The artificial brain stands as both a marvel and a cautionary tale, urging us to navigate this frontier with thoughtful consideration and a vigilant stride forward.

For detailed information, please watch the video on the YouTube channel The AIGRID.

=========================================================================

Technical Terms Explanation:

PaLM 2 (Pathways Language Model 2): Google’s earlier language model and the predecessor to Gemini, announced on May 10, 2023. It was a significant milestone in Google’s AI work before Gemini, which has since surpassed it in capabilities and in integration with Google technologies. PaLM 2 helped build Google’s expertise in large language models, paving the way for more advanced models like Gemini.

Multimodal Understanding: The ability of Gemini to comprehend and process information across several data types at once, such as text, code, audio, images, and video.

Boundaries of AI: Gemini’s capacity to push the limits of what AI can achieve, showcasing unprecedented efficiency in problem-solving.

Multimodal Capabilities: Gemini’s proficiency in handling different modes of data, enabling cross-modal reasoning and versatile problem-solving.

Google DeepMind: Alphabet’s AI research and development unit, responsible for the creation of Gemini.

OCR (Optical Character Recognition): Technology that extracts text from images. Gemini’s image understanding and recognition capabilities remove the need for external OCR tools.

Multilingual Capabilities: Gemini’s ability to operate across various languages, facilitating tasks like translation and comprehension in more than 100 languages.

Model Sizes (Ultra, Pro, Nano): Different configurations of Gemini tailored to specific needs: highly complex tasks (Ultra), a broad range of tasks (Pro), and efficient on-device use (Nano).

Responsibility in Development: Google’s commitment to ethical AI practices, including extensive evaluation to mitigate biases and potential harms in Gemini’s deployment.

Transformer Model: A neural network architecture widely used in natural language processing, known for its ability to process sequential data efficiently.

Attention Mechanisms: Techniques used in neural networks to selectively focus on specific parts of the input data, enhancing the model’s ability to process relevant information.
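To make the idea of "selective focus" concrete, here is a minimal sketch of scaled dot-product attention, the standard form of attention in Transformer models. It is a generic illustration only, not Gemini's actual implementation (which is not public). The function names and the toy vectors are assumptions made up for this example.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(query, keys, values):
    """Scaled dot-product attention for a single query vector."""
    scale = math.sqrt(len(query))
    # Similarity between the query and every key, scaled by sqrt(dimension).
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    # The output is the weighted average of the value vectors.
    dim = len(values[0])
    output = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]
    return weights, output

# Toy data (hypothetical): three input positions, two-dimensional vectors.
keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
values = [[10.0, 0.0], [0.0, 10.0], [5.0, 5.0]]
query = [1.0, 0.0]

weights, output = attention(query, keys, values)
print(weights)  # one weight per input position
print(output)   # blend of the values, dominated by the best-matching keys
```

In this toy case the query matches the first and third keys better than the second, so those positions receive larger weights and contribute more to the output. That weighting is what "focusing on relevant parts of the input" means in practice.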

TPU v5 Chips: Tensor Processing Unit (TPU) v5 chips are specialized hardware accelerators designed by Google for the efficient training and serving of machine learning models.

Bias and Toxicity Mitigation: Measures taken to identify and reduce bias and potentially harmful content in models, supporting ethical and responsible AI deployment.

Multimodal Reasoning: The ability of a model to reason and generate outputs by integrating information from different modalities, such as text, image, audio, and video.
